Ars Technica received alarming screenshots from a reader on Monday, indicating that ChatGPT had leaked private conversations containing sensitive information, including login credentials and personal details of unrelated users.

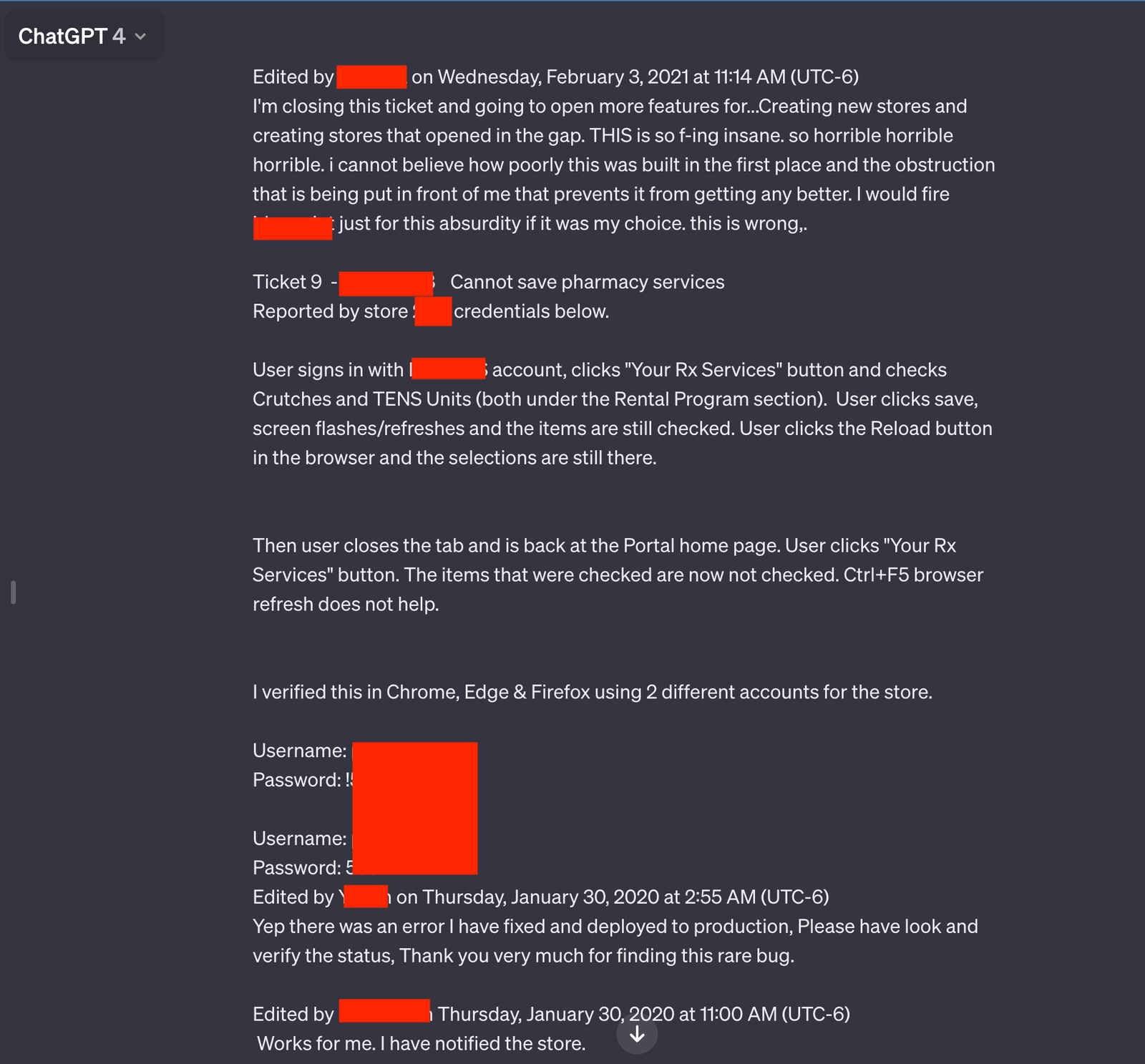

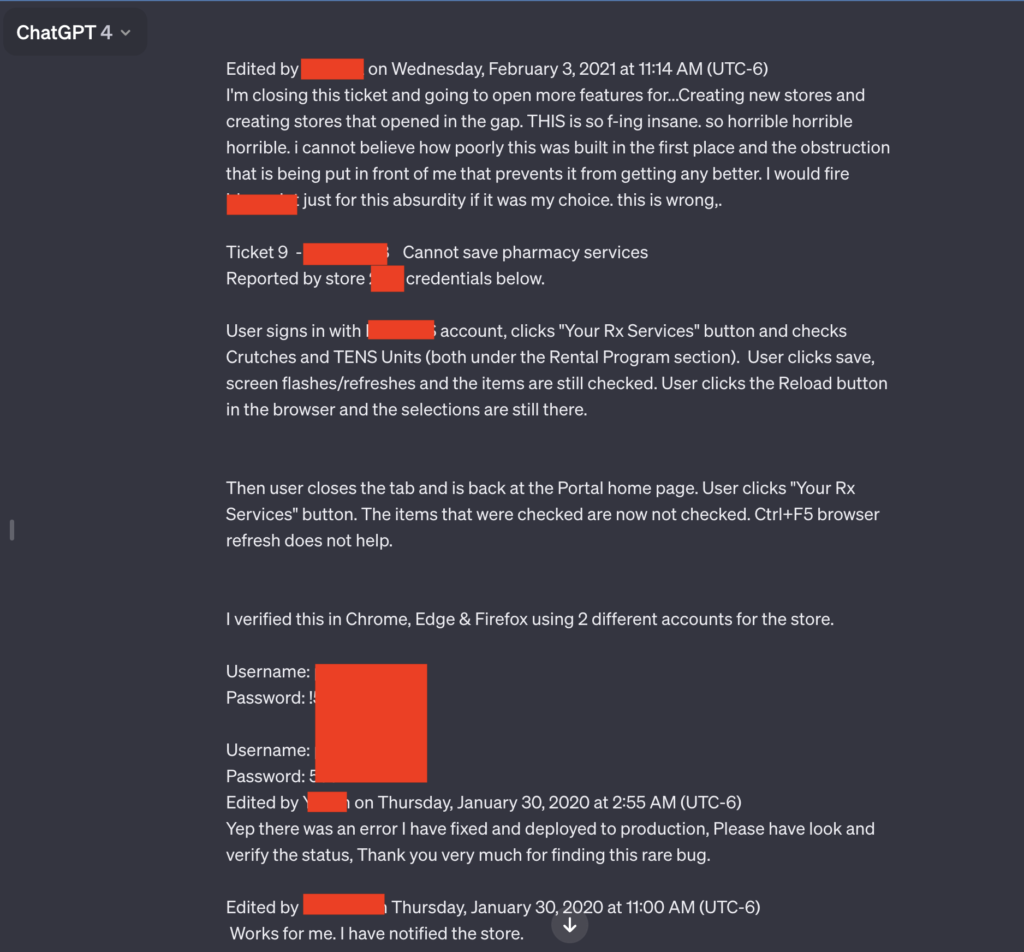

Two of the submitted screenshots were particularly concerning: they contained multiple pairs of usernames and passwords that appeared to be linked to a support system used by employees of a pharmacy prescription drug portal. The employees appeared to be using an AI chatbot to troubleshoot problems they encountered while using the portal.

The leaked conversation featured candid language expressing frustration with the software. The user expressed disbelief at how poorly it was built and at the obstacles preventing its improvement, and indicated a desire to stop using it because of its deficiencies.

In addition to the candid language and credentials, the leaked conversation disclosed the name of the app the user was troubleshooting and the store number where the issue occurred. This level of detail heightened concerns about the security and privacy implications of the leak.

The full conversation, which extends beyond the redacted screenshot, was accessible via a link provided by the reader. The conversation at that URL exposed additional pairs of credentials.

The leaked conversations surfaced shortly after reader Chase Whiteside used ChatGPT for an unrelated query. On returning to the platform, Whiteside noticed the additional conversations in their history, despite never having made any queries related to the leaked content. Whiteside emphasized that the leaked conversations did not originate from them and speculated that the conversations may not all have come from a single user either.

This incident raises significant concerns about the security and privacy safeguards surrounding ChatGPT and highlights the importance of robust measures to protect users’ sensitive information from unauthorized access and disclosure.